How do birds interact with solar farms? Solar energy development could affect birds, but field surveys of bird carcasses do not provide enough data to accurately characterize the nature and magnitude of that impact. Because carcass surveys do not collect data in real time, they cannot capture bird behavior around solar panels that could indicate causes of fatality, sources of attraction, and potential benefits to birds.

In collaboration with Argonne’s Strategic Security Sciences and Mathematics and Computer Science divisions, EVS is developing a technology to continuously collect data on avian-solar interactions (e.g., perching, fly-through, and collisions) in a project funded by the U.S. Department of Energy (DOE) Solar Energy Technologies Office (SETO). The system incorporates a computer vision approach supported by machine/deep learning (ML/DL) algorithms and high-definition edge‑computing cameras. The avian monitoring technology will improve the ability to collect a large volume of avian-solar interaction data to better understand potential avian impacts associated with solar energy facilities.

How Does the System Work?

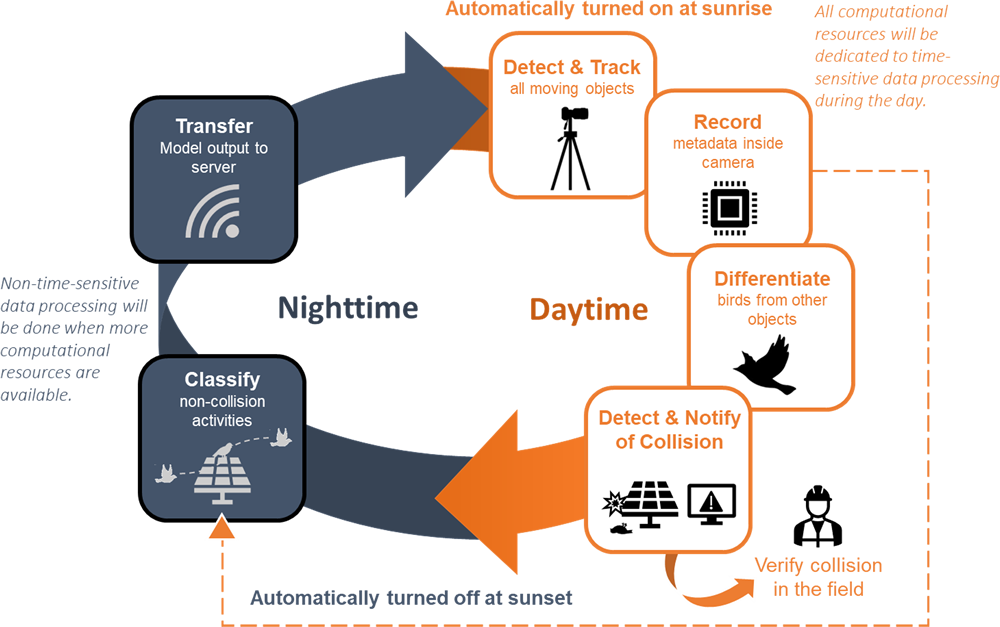

Our avian monitoring camera turns on automatically around sunrise, monitors birds continuously during the day, and turns off automatically around sunset.

During the day, our camera detects moving objects as soon as they enter the view and tracks them until they leave the view or stop moving. The camera records the speed, trajectory, and image sequence of each object. It then identifies which of those objects are birds and determines whether each bird collided with a panel. When a collision is detected, the camera notifies users shortly after the event.
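As a rough illustration of what recording "speed and trajectory" for a tracked object can involve, the sketch below summarizes a track from timestamped centroid positions. The `Observation` structure, the `track_summary` function, and the pixel-based units are hypothetical choices made for this example; they are not taken from the project's software.

```python
import math
from dataclasses import dataclass


@dataclass
class Observation:
    """One detection of a tracked object (hypothetical structure)."""
    t: float  # timestamp in seconds
    x: float  # centroid x in pixels
    y: float  # centroid y in pixels (image coordinates, y grows downward)


def track_summary(track):
    """Summarize a track: path length, mean speed, and net heading."""
    if len(track) < 2:
        return {"path_px": 0.0, "speed_px_s": 0.0, "heading_deg": None}
    # Sum the straight-line distance between consecutive centroids.
    path = sum(
        math.hypot(b.x - a.x, b.y - a.y) for a, b in zip(track, track[1:])
    )
    duration = track[-1].t - track[0].t
    speed = path / duration if duration > 0 else 0.0
    # Net heading from the first to the last position, in image coordinates.
    dx = track[-1].x - track[0].x
    dy = track[-1].y - track[0].y
    heading = math.degrees(math.atan2(dy, dx)) if (dx or dy) else None
    return {"path_px": path, "speed_px_s": speed, "heading_deg": heading}


# Example: an object moving along a straight diagonal for one second.
track = [
    Observation(0.0, 0.0, 0.0),
    Observation(0.5, 3.0, 4.0),
    Observation(1.0, 6.0, 8.0),
]
summary = track_summary(track)
```

Converting pixel speeds to real-world units would require camera calibration, which this sketch does not attempt.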

During the night, the camera classifies the bird activities recorded during the day into five types: fly-over, fly-through, perch on panel, land on ground, and perch in background. The camera then sends the output, including the times and locations of bird detections and the associated activities, to the server. Once the transfer is complete, the camera discards the data to free space for the next day.
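The nightly output described above can be pictured as a list of records, each carrying a detection time, a location, and one of the five activity types. The following is a minimal sketch under stated assumptions: the `BirdRecord` fields, the JSON layout, and the `send` callback are all hypothetical, and clearing local data only after a successful send is an assumption of this example rather than a documented behavior of the camera.

```python
import json
from dataclasses import dataclass
from enum import Enum


class Activity(str, Enum):
    """The five activity types named in the text."""
    FLY_OVER = "fly-over"
    FLY_THROUGH = "fly-through"
    PERCH_ON_PANEL = "perch on panel"
    LAND_ON_GROUND = "land on ground"
    PERCH_IN_BACKGROUND = "perch in background"


@dataclass
class BirdRecord:
    """One classified bird detection (hypothetical fields)."""
    detected_at: str   # ISO 8601 timestamp
    location: tuple    # assumed here to be (x, y) pixel coordinates
    activity: Activity


def build_daily_report(records):
    """Serialize the day's records to a JSON string."""
    payload = [
        {
            "detected_at": r.detected_at,
            "location": list(r.location),
            "activity": r.activity.value,
        }
        for r in records
    ]
    return json.dumps(payload)


def nightly_upload(records, send):
    """Send the report, then clear local records only if the send succeeded."""
    report = build_daily_report(records)
    if send(report):
        records.clear()  # free space for the next day
        return True
    return False


# Example with a stand-in for the server: collect reports in a list.
sent = []
records = [
    BirdRecord("2024-05-01T09:15:00", (412, 230), Activity.FLY_THROUGH),
    BirdRecord("2024-05-01T13:40:00", (88, 301), Activity.PERCH_ON_PANEL),
]
ok = nightly_upload(records, lambda report: sent.append(report) or True)
```

Keeping the activity labels in a single enumeration makes it harder for a misspelled label to reach the server unnoticed.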

Detection, tracking, and classification are performed by our AI models. All processing takes place inside the camera, an approach known as edge computing.

How Is AI Used in the Camera?

The camera incorporates four AI models to monitor birds. We utilize a background subtraction algorithm known as a Gaussian Mixture Model to detect moving objects; a multiple instance learning model that takes the entire sequence of frames as input to classify moving objects; a hybrid ML model to detect collisions; and a Fusion bidirectional Long Short-Term Memory model to classify bird activities.
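To give a feel for how Gaussian background subtraction flags moving objects, the sketch below keeps a single running Gaussian (mean and variance) per pixel and marks a pixel as foreground when it falls too far from that model. This is a deliberate simplification: a full Gaussian Mixture Model keeps several weighted Gaussians per pixel. The function names and the parameters `alpha`, `k`, `initial_var`, and `min_var` are assumptions chosen for illustration, not the project's settings.

```python
def make_model(first_frame, initial_var=25.0):
    """Create one [mean, variance] pair per pixel, seeded from the first frame."""
    return [[[float(p), initial_var] for p in row] for row in first_frame]


def update_and_detect(model, frame, alpha=0.05, k=2.5, min_var=4.0):
    """Return a 0/1 foreground mask and adapt the model on background pixels."""
    mask = []
    for model_row, frame_row in zip(model, frame):
        mask_row = []
        for cell, pixel in zip(model_row, frame_row):
            mean, var = cell
            diff = pixel - mean
            # Foreground if the pixel lies more than k standard deviations away.
            is_foreground = diff * diff > (k * k) * var
            if not is_foreground:
                # Slowly adapt mean and variance toward the new observation.
                cell[0] = mean + alpha * diff
                cell[1] = max(min_var, var + alpha * (diff * diff - var))
            mask_row.append(1 if is_foreground else 0)
        mask.append(mask_row)
    return mask


# Example: a static 3x3 grayscale scene, then one bright pixel appears.
background = [[100, 100, 100], [100, 100, 100], [100, 100, 100]]
model = make_model(background)
for _ in range(10):
    update_and_detect(model, background)  # let the model settle

frame = [row[:] for row in background]
frame[1][1] = 200  # a moving object enters the center pixel
mask = update_and_detect(model, frame)
```

In practice, a library implementation such as OpenCV's `createBackgroundSubtractorMOG2` would typically be preferred over hand-written per-pixel loops; the pure-Python version here only illustrates the idea.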